Turning Your Smartphone Into a Ruler: Camera and IMU Combine for Accurate Measurements

Carnegie Mellon Researchers Say Wave of Hand Can Calibrate Images

Byron Spice | Monday, March 30, 2015

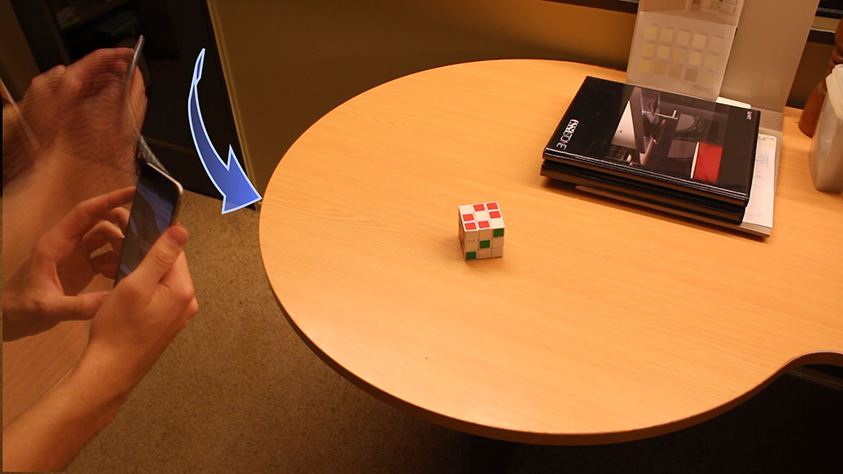

Researchers at Carnegie Mellon University have developed a way to use a smartphone's sensors to build 3-D models of faces or other objects and — literally, with the wave of a hand — provide accurate measurements of those objects.

Using video from smartphones or other devices to create 3-D models of the world has become increasingly common as computer vision has grown more sophisticated. Determining the scale of these models, however, has been problematic. CMU researchers were able to solve that problem by leveraging the cheap inertial measurement units (IMUs) used to automatically switch a smartphone's screen orientation from portrait to landscape depending on how the user is holding it. (See the video below.)

The IMUs aren't very precise — they don't have to be to enhance the smartphone's user interactivity. But Simon Lucey, associate research professor in the Robotics Institute, said these "noisy" IMUs are good enough that, when the user waves the camera around a bit, they can calibrate 3-D models as they are created and enable the phone to get accurate measurements from the models.

"We've been able to get accuracies with cheap sensors that we hadn't imagined," Lucey said. "With a face tracker program, we are able to measure the distance between a person's pupils within half a millimeter." Such measurements would be useful for applications such as virtual shopping for eyeglass frames.

As computer vision advances, the technique conceivably could be used over larger areas, like modeling a room in 3-D to see if a couch or other piece of furniture would fit.

And though the technique does not yet operate in real time, it's possible that someday robots and self-driving cars might be able to use this low-energy passive perception to help navigate, rather than power-hungry active perception technologies, such as radars and laser rangefinders, that emit radiation, Lucey said.

Since the 1980s, when the Robotics Institute's Takeo Kanade pioneered a vision technique called "structure from motion," computer vision researchers have been able to build increasingly accurate 3-D models based on multiple 2-D images or video captured as the camera moves around an object or scene. The only problem with this purely visual approach to 3-D reconstruction is that it is ambiguous as to scale.
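
The scale ambiguity can be seen directly in the standard pinhole camera model. The following is a sketch in conventional notation; the symbols $K$ (camera intrinsics), $R$ and $\mathbf{t}$ (camera rotation and translation), $\mathbf{X}$ (a 3-D point), $\mathbf{x}$ (its image) and $s$ (a scale factor) are introduced here for illustration and do not come from the article.

```latex
\[
\mathbf{x} \sim K\,(R\,\mathbf{X} + \mathbf{t})
\quad\Longrightarrow\quad
K\,\bigl(R\,(s\,\mathbf{X}) + s\,\mathbf{t}\bigr)
  = s\,K\,(R\,\mathbf{X} + \mathbf{t}) \sim \mathbf{x},
\qquad s > 0 .
\]
```

Because image coordinates are homogeneous, multiplying the whole scene and the camera's translation by the same factor $s$ leaves every image unchanged. A face modeled at twice its true size, filmed from twice the distance, produces the same video, so images alone cannot say which size is correct.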

Lucey was part of an Australian research team that used this principle to develop an augmented-reality technology called Smart Fit, which created a 3-D model of a person's face so that person could virtually "try on" eyeglass frames. But determining the scale of the face model so the frame size could be properly adjusted required additional, error-prone steps, such as snapping a photo as the person stood in front of a mirror while holding a QR code.

"We figured there had to be a better way to do this," Lucey said.

Lucey and colleagues at the University of Queensland, Surya Singh and Kit Hamm, found that they could obtain the desired calibration by using the smartphone's IMU to track the camera's motion as it captures the imagery. They reported their findings last year at the European Conference on Computer Vision.
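
One way to picture this calibration is as a least-squares fit: the camera path recovered from video has the right shape but unknown size, while the IMU reports acceleration in real units, so a single scale factor can be chosen to make the two agree. The Python sketch below illustrates that idea on synthetic data. It is not the researchers' implementation. The function names, the simulated hand wave, and the noise level are all hypothetical, and it assumes the IMU readings have already been rotated into the camera path's frame, had gravity and bias removed, and been time-aligned with the video, all of which a real system would have to handle.

```python
import math
import random


def second_difference(positions, dt):
    """Central-difference acceleration at the interior samples of a 3-D path."""
    acc = []
    for i in range(1, len(positions) - 1):
        acc.append(tuple(
            (positions[i + 1][k] - 2.0 * positions[i][k] + positions[i - 1][k])
            / (dt * dt)
            for k in range(3)
        ))
    return acc


def estimate_scale(visual_positions, imu_accel, dt):
    """Return s minimising sum |s * a_vis - a_imu|^2 (closed-form least squares).

    visual_positions: camera positions from vision, in arbitrary units.
    imu_accel: accelerations in m/s^2, ASSUMED to be in the same frame,
               gravity- and bias-free, and sampled at the same instants.
    """
    a_vis = second_difference(visual_positions, dt)
    a_imu = imu_accel[1:-1]  # drop the ends to match the interior samples
    num = sum(v[k] * m[k] for v, m in zip(a_vis, a_imu) for k in range(3))
    den = sum(v[k] * v[k] for v in a_vis for k in range(3))
    if den == 0.0:
        raise ValueError("camera did not accelerate; scale is unobservable")
    return num / den


def simulate_wave(true_scale=0.25, rate_hz=100.0, seconds=3.0,
                  noise_std=0.3, seed=0):
    """Hypothetical hand wave: returns (visual_positions, imu_accel, dt)."""
    rng = random.Random(seed)
    dt = 1.0 / rate_hz
    w = 2.0 * math.pi * 1.5  # 1.5 Hz back-and-forth motion
    visual, accel = [], []
    for i in range(int(seconds * rate_hz)):
        t = i * dt
        metric = (0.15 * math.sin(w * t), 0.05 * math.sin(2.0 * w * t), 0.0)
        # Vision recovers the shape of the path but not its size.
        visual.append(tuple(c / true_scale for c in metric))
        exact = (-0.15 * w * w * math.sin(w * t),
                 -0.05 * 4.0 * w * w * math.sin(2.0 * w * t),
                 0.0)
        # A cheap IMU is modeled here as the true acceleration plus noise.
        accel.append(tuple(a + rng.gauss(0.0, noise_std) for a in exact))
    return visual, accel, dt


if __name__ == "__main__":
    vis, imu, dt = simulate_wave(true_scale=0.25)
    print("estimated metres per model unit:", estimate_scale(vis, imu, dt))
```

Multiplying any length measured on the model by the estimated factor converts it to metres. The sketch also shows why the wave of the hand matters: if the camera never accelerates, the denominator is zero and no scale can be recovered.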

"The trajectory we create with these cheap IMUs will 'drift' over time, but the vision element we create is very accurate," Lucey said. "So we can use the 3-D model to correct for the errors caused by the IMU, even as we use the IMU to estimate the dimensions of the model."

This is in contrast to previous efforts in the robotics community to combine IMUs with imagery. Most of those applications have involved odometry or navigation, which requires IMUs that are accurate and therefore expensive.

"The amazing thing is that we can turn any smartphone into a ruler — no special hardware, no depth sensors, just your regular smart device," Lucey said.

As more smartphones incorporate high frame-rate video cameras, as Apple's iPhone 6 has, the accuracy of the technique will get even better.

"With a high frame-rate camera," he explained, "we can excite the IMU by moving the phone faster, without corrupting the images."

Byron Spice | 412-268-9068 | bspice@cs.cmu.edu